THE RISE OF BEHAVIORAL SURVEILLANCE

by Tobin M. Albanese

Abstract. Behavioral surveillance transforms ambient signals—movement, clicks, proximity, purchases—into inferences that shape real outcomes. This essay maps the pipeline from collection to consequence, catalogs failure modes, and proposes governance patterns centered on purpose limitation, minimization, transparency, and contestability.

Executive Summary

- Pipeline clarity: collection → linkage → enrichment → inference → action.

- Primary risks: purpose creep, chilling effects, disparate error, re-identification via linkage.

- Guardrails: tight purpose statements, aggressive minimization, retention time-to-live limits (TTLs), differential-privacy (DP) reporting, independent oversight.

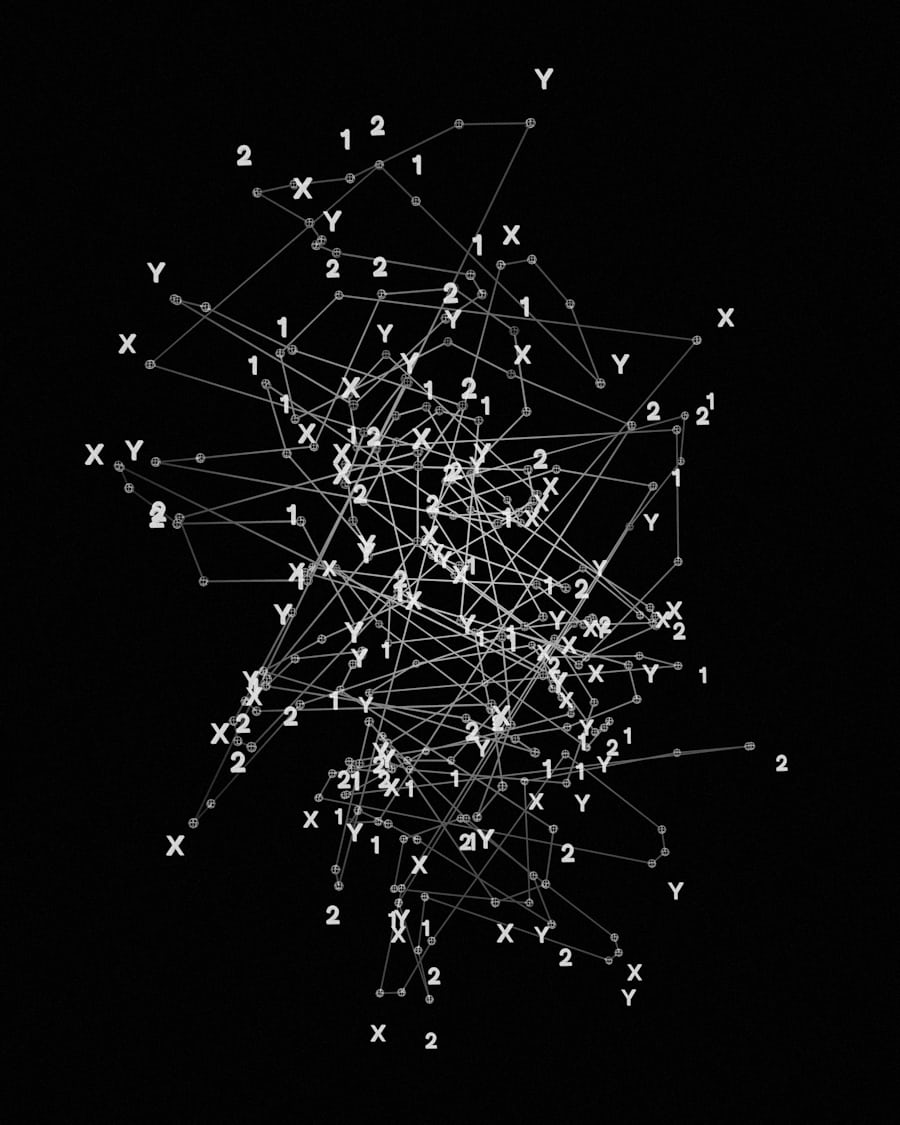

Taxonomy of Signals & Sources

- Device & network: location pings, Wi-Fi/Bluetooth telemetry, traffic metadata.

- Platform exhaust: clickstreams, dwell times, search terms, recommendation traces.

- Physical sensors: cameras (counts, not faces), badge swipes, environmental sensors.

- Commercial brokers: purchased segments with uncertain provenance, which carry the highest re-identification (re-ID) risk.

Each source carries different consent, accuracy, and linkage properties; conflation is where many harms begin.

From Signals to Decisions

- Collection: define lawful basis; exclude sensitive categories by policy and enforcement.

- Linkage: join keys, embeddings, or fuzzy matching; quantify re-ID risk explicitly.

- Enrichment: derive features; document transformations; avoid proxy discrimination.

- Inference: clustering, anomaly detection, propensity, risk scoring—with calibration checks.

- Action: interventions, routing, enforcement; ensure due process and human review where rights are implicated.
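
One way to make "quantify re-ID risk explicitly" concrete at the linkage stage is to measure how many records become unique once columns are joined. The sketch below is illustrative only: the function name `reid_risk`, the column names, and the sample rows are assumptions, not part of any system described here. It reports the smallest equivalence-class size (the k in k-anonymity) and the share of records that are unique on the chosen quasi-identifiers.

```python
from collections import Counter
from typing import Iterable, Sequence


def reid_risk(records: Iterable[dict], quasi_identifiers: Sequence[str]) -> dict:
    """Summarize how identifiable records are on a set of quasi-identifiers.

    Returns the smallest equivalence-class size (k) and the share of
    records that are unique on the chosen columns.
    """
    keys = [tuple(r[q] for q in quasi_identifiers) for r in records]
    if not keys:
        return {"k": 0, "unique_share": 0.0}
    class_sizes = Counter(keys)
    unique = sum(1 for key in keys if class_sizes[key] == 1)
    return {"k": min(class_sizes.values()), "unique_share": unique / len(keys)}


# Hypothetical linked table: coarse location cell, hour of day, device type.
linked = [
    {"cell": "A1", "hour": 8, "device": "phone"},
    {"cell": "A1", "hour": 8, "device": "phone"},
    {"cell": "A1", "hour": 9, "device": "phone"},
    {"cell": "B2", "hour": 8, "device": "tablet"},
]

print(reid_risk(linked, ["cell"]))                    # {'k': 1, 'unique_share': 0.25}
print(reid_risk(linked, ["cell", "hour", "device"]))  # {'k': 1, 'unique_share': 0.5}
```

In this toy table, joining two more columns doubles the share of unique records. A linkage review could run the same check before and after each proposed join and block joins that push uniqueness past an agreed limit.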

Risk & Civil Liberties

- Purpose creep: secondary uses without renewed consent/authorization.

- Chilling effects: measurable behavior changes (assembly, speech) due to perceived surveillance.

- Disparate error: uneven false-positive and false-negative rates (FPR/FNR) across groups; harms compound with feedback loops.

- Singling-out & linkability: individual traceability through dataset joins.

Guardrails should be built where harm materializes: at linkage, inference, and action—not only at collection.
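
Disparate error is straightforward to measure once predictions, outcomes, and a group attribute are available for an audit sample. The following is a minimal sketch, assuming binary labels and a hypothetical input of `(group, y_true, y_pred)` tuples; the function name and the sample rows are illustrative.

```python
from collections import defaultdict


def error_rates_by_group(rows):
    """Compute FPR and FNR per group.

    rows: iterable of (group, y_true, y_pred) with binary labels 0/1.
    A rate is None when the group has no negatives (FPR) or positives (FNR).
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, y_true, y_pred in rows:
        if y_true == 1:
            counts[group]["tp" if y_pred == 1 else "fn"] += 1
        else:
            counts[group]["fp" if y_pred == 1 else "tn"] += 1

    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]
        positives = c["tp"] + c["fn"]
        rates[group] = {
            "fpr": c["fp"] / negatives if negatives else None,
            "fnr": c["fn"] / positives if positives else None,
        }
    return rates


# Hypothetical audit sample with two groups.
rows = [
    ("a", 0, 0), ("a", 0, 1), ("a", 1, 1), ("a", 1, 1),
    ("b", 0, 0), ("b", 0, 0), ("b", 1, 0), ("b", 1, 1),
]
print(error_rates_by_group(rows))
# {'a': {'fpr': 0.5, 'fnr': 0.0}, 'b': {'fpr': 0.0, 'fnr': 0.5}}
```

Here group "a" absorbs the false alarms while group "b" absorbs the misses. An overall accuracy figure would hide exactly this pattern.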

Design Patterns for Guardrails

- Purpose limitation: a binding, testable purpose clause; new purposes require a fresh data protection impact assessment (DPIA).

- Data minimization: collect the least precise, coarsest-grained signal that still meets the mission need.

- Edge processing: prefer on-device aggregation; transmit only aggregates or alerts.

- Privacy-preserving analytics: differential privacy for reports; secure enclaves or secure multi-party computation (MPC) for joins when needed.

- Separation of duties: data stewards distinct from policy decision-makers; approvals logged.

- Retention budgets: default TTLs with auto-deletion; no indefinite “just in case” retention.

- Transparency & contestability: affected parties can understand, appeal, and correct outcomes.
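
To illustrate what "differential privacy for reports" can mean in practice, the sketch below releases a single count with Laplace noise. It assumes each person contributes at most one unit to the count (sensitivity 1); the function name `dp_count` and the example values are illustrative. It is a teaching sketch, not a hardened implementation: naive floating-point Laplace sampling has known weaknesses, and a production system would more plausibly rely on a vetted differential-privacy library and a secure randomness source.

```python
import random


def dp_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with Laplace noise of scale sensitivity / epsilon.

    Assumes sensitivity 1: adding or removing one person changes the
    count by at most 1. Larger per-person contributions need more noise.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = 1.0 / epsilon
    # The difference of two i.i.d. exponentials with mean `scale`
    # follows a Laplace(0, scale) distribution.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


# Fixed seed for a repeatable demonstration only; do not seed in real use.
rng = random.Random(42)
noisy = dp_count(true_count=1280, epsilon=0.5, rng=rng)
print(round(noisy))
```

Smaller epsilon values add more noise and give stronger protection. Under basic sequential composition, the epsilons of all reports drawn from the same data add up, which is why a reporting budget is usually stated per period rather than per query.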

    # policy.yaml (excerpt)
    purpose: "crowd safety analytics"
    forbidden_uses: ["discipline", "unrelated_investigations"]
    inputs:
      - camera_counts  # no identity capture
      - gate_badges    # hashed, rotated keys
    retention_days: 14
    aggregation: { grid: "500m", interval: "15m" }
    privacy_reporting: { dp_epsilon_monthly: 2.0 }
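
A policy file only matters if code refuses to act outside it. The sketch below shows one way the excerpt above could be enforced, under several assumptions: the policy is mirrored as a plain Python dict (so no YAML parser is needed), records carry a hypothetical `observed_at` timestamp, and the helper names `authorize`, `purge_expired`, and `spend_epsilon` are invented for illustration.

```python
from datetime import datetime, timedelta, timezone

# Mirror of the policy excerpt as a dict; field names follow the excerpt.
POLICY = {
    "purpose": "crowd safety analytics",
    "forbidden_uses": ["discipline", "unrelated_investigations"],
    "retention_days": 14,
    "privacy_reporting": {"dp_epsilon_monthly": 2.0},
}


def authorize(policy: dict, requested_use: str) -> bool:
    """Deny by default: only the declared purpose is allowed."""
    if requested_use in policy["forbidden_uses"]:
        return False  # explicit denial, useful for logging the reason
    return requested_use == policy["purpose"]


def purge_expired(records: list, policy: dict, now: datetime) -> list:
    """Keep only records inside the retention window."""
    cutoff = now - timedelta(days=policy["retention_days"])
    return [r for r in records if r["observed_at"] >= cutoff]


def spend_epsilon(policy: dict, spent_this_month: float, request: float) -> float:
    """Return the new monthly total, or raise if the budget would be exceeded."""
    if request <= 0:
        raise ValueError("request must be positive")
    budget = policy["privacy_reporting"]["dp_epsilon_monthly"]
    if spent_this_month + request > budget:
        raise PermissionError("monthly epsilon budget exhausted")
    return spent_this_month + request


now = datetime(2024, 1, 31, tzinfo=timezone.utc)
records = [
    {"id": 1, "observed_at": now - timedelta(days=3)},
    {"id": 2, "observed_at": now - timedelta(days=20)},  # past the 14-day TTL
]
print(authorize(POLICY, "discipline"))                        # False
print([r["id"] for r in purge_expired(records, POLICY, now)])  # [1]
print(spend_epsilon(POLICY, spent_this_month=1.5, request=0.5))  # 2.0
```

The deny-by-default check means a new purpose cannot be added by omission; it has to be written into the policy, which is the point at which a fresh review can be required.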

Evaluation, Fairness, and Shift

- Operating point rationale: choose thresholds using harm-weighted error costs.

- Calibration: reliability diagrams per subgroup; where a subgroup is miscalibrated, apply post-hoc recalibration (for example, Platt scaling or isotonic regression).

- Shift tests: base-rate drift, seasonality, covariate shift; pre-commit adaptation rules.

- Privacy attacks: test for membership inference and linkage leakage.
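
The operating-point item above can be made concrete with a small search over candidate thresholds. This is a minimal sketch, assuming a case is flagged when its score is at or above the threshold and that the costs of a false positive and a false negative have been agreed in advance; the function name, scores, labels, and cost values are all illustrative.

```python
def pick_threshold(scores, labels, cost_fp, cost_fn):
    """Return (threshold, total_cost) minimizing harm-weighted error cost.

    A case is flagged when score >= threshold. Ties go to the lowest
    candidate threshold. float('inf') represents "never flag".
    """
    candidates = sorted(set(scores)) + [float("inf")]
    best = None
    for t in candidates:
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        fn = sum(1 for s, y in zip(scores, labels) if s < t and y == 1)
        cost = cost_fp * fp + cost_fn * fn
        if best is None or cost < best[1]:
            best = (t, cost)
    return best


# Hypothetical validation scores and ground-truth labels.
scores = [0.1, 0.4, 0.35, 0.8]
labels = [0, 0, 1, 1]

print(pick_threshold(scores, labels, cost_fp=1, cost_fn=1))  # (0.35, 1)
print(pick_threshold(scores, labels, cost_fp=5, cost_fn=1))  # (0.8, 1)
```

When a false flag is weighted five times as heavily as a miss, for instance because it triggers an intervention against a person, the chosen threshold moves from 0.35 to 0.8. Recording the cost weights alongside the threshold gives reviewers the rationale, not just the number.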

Oversight & Accountability

- DPIA: assess necessity, proportionality, safeguards, residual risk, and alternatives.

- Independent review: periodic external audits; publish summaries.

- Transparency reports: usage counts, overrides, complaints, policy changes.

- Appeals: documented path for individuals to challenge outcomes.
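
A transparency report of the kind listed above can be generated directly from an audit log rather than assembled by hand. The sketch below assumes a hypothetical `AuditEvent` record; its field names and the event kinds are illustrative and would need to match whatever audit schema is actually deployed.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class AuditEvent:
    """Hypothetical audit-log entry; field names are illustrative only."""
    day: date
    kind: str        # e.g. "query", "override", "complaint", "policy_change"
    actor_role: str  # role, not a personal identifier


def transparency_report(events, start: date, end: date) -> dict:
    """Count events by kind for the inclusive period [start, end]."""
    in_period = [e for e in events if start <= e.day <= end]
    return dict(Counter(e.kind for e in in_period))


events = [
    AuditEvent(date(2024, 1, 5), "query", "analyst"),
    AuditEvent(date(2024, 1, 9), "query", "analyst"),
    AuditEvent(date(2024, 1, 12), "override", "supervisor"),
    AuditEvent(date(2024, 2, 2), "complaint", "public"),  # outside the period
]
print(transparency_report(events, date(2024, 1, 1), date(2024, 1, 31)))
# {'query': 2, 'override': 1}
```

Logging the actor's role rather than a name keeps the audit trail useful for oversight without turning it into another behavioral dataset about staff.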

Case Sketches (Anonymized)

- Transit surge sensing: counts improved staffing but required coarse grids and strict TTLs to avoid tracking.

- Campus anomaly alerts: initial false positives clustered in under-lit areas; calibration and signage reduced harm.

Research Agenda

- Operational measures of chilling effects and practical thresholds for intervention.

- Shift-robust calibration that honors retention limits.

- Public audit formats that preserve privacy while delivering accountability.

Forthcoming ResearchGate preprint link will be added here.

Implementation Checklist

- Purpose statement and forbidden uses approved.

- Minimization & aggregation documented; DPIA completed.

- Subgroup calibration verified; thresholds justified.

- Retention TTLs enforced; transparency report cadence set.

- Appeals workflow staffed; audit log schema deployed.

Resources & Links

Technical & Governance References